can an AI tool “channel” messages?

uh, I guess it depends on what your definition of channel is ¯\_(ツ)_/¯

This is the fifth week in a six-week series on “The Divine Download.” If you want access to the full curriculum for this series, subscribe to The Twelfth House.

The smarmy little 4th grader in me wants to roll their eyes and say, “No ❤️”

But I know you did not click on this article to speak to 4th-grade me… so I’m going to give 30-year-old me the keyboard now.

What is AI, actually?

Before we get into the GPT-induced psychosis of it all, I feel like we’d all benefit from a quick refresh on what the robot is and how it works!¹

There are several types of Artificial Intelligence.

We’ve come a longgg way with AI, from the rinky-dink AI that lets you play chess against the computer to DALL-E making cursed photos of Hulk Hogan on Love Island to whatever is happening on r/MyBoyfriendIsAI.

When the average person says “AI,” they are likely talking about the ChatGPTs of the world — Large Language Models (LLMs).

Despite what the divas planning their wedding with their fiAInces (workshopping that one) might think, LLMs are not sentient. LLMs don’t use logic or reasoning the way a human brain does. They use statistics to predict the next likely word in a sentence based on the patterns they’ve seen in the content they’re trained on.
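Curious what “predict the next likely word” looks like in miniature? Here’s a toy Python sketch. Big caveat: the mini “training corpus” is made up, every name in it is hypothetical, and counting which word follows which (a bigram model) is a drastically simplified stand-in, not how ChatGPT actually works. Real LLMs use huge neural networks trained on enormous amounts of text. The vibe is the same, though: always grab the word that most often came next, and you get the most predictable sentence possible.

```python
from collections import Counter, defaultdict

# Hypothetical, made-up mini corpus for illustration only.
# Real LLMs train on vastly more text and use neural networks, not word counts.
corpus = [
    "use the new moon to set intentions",
    "use the new moon to set intentions and journal",
    "use the full moon to release",
]

# Count which word follows which (a bigram model)
next_words = defaultdict(Counter)
for sentence in corpus:
    words = sentence.split()
    for current, following in zip(words, words[1:]):
        next_words[current][following] += 1


def predict_next(word):
    """Return the word that most often followed `word`, or None if it never did."""
    followers = next_words.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def generate(start, max_words=10):
    """Greedily chain the most likely next word, predictive-text style."""
    words = [start]
    while len(words) < max_words:
        nxt = predict_next(words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)


print(generate("use"))
```

Feed it “use” and it spits back the most well-worn path through its data: nothing new, nothing surprising, just the most common thing it has seen.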

Basically, they give you an answer based on the average response seen around the web, but they’re pulling from millions of data points in like, 3 seconds to generate that answer.

They calculate the “logical” average answer the way you might calculate the average price for an iced matcha latte in your area.

($5 + $6.25 + $7.06 + $9)/4 = $6.83²
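For anyone who wants to double-check the PEMDAS, here’s the same average as a tiny Python sketch (the prices are just the example numbers above, not real matcha data):

```python
from decimal import Decimal

# Example iced matcha latte prices from above (illustrative, not real data)
prices = [Decimal("5.00"), Decimal("6.25"), Decimal("7.06"), Decimal("9.00")]

# Parentheses first (add them up), then divide by how many there are
average = sum(prices) / len(prices)

# Round to the nearest cent for display
rounded = average.quantize(Decimal("0.01"))
print(f"${rounded}")  # prints $6.83
```

Decimal is used instead of plain floats so the cents don’t pick up binary rounding weirdness.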

You type in your query: “Is it bad that I hit the curb every time I park?”

The LLM draws on all of the web, plus whatever books and blogs and Reddit posts and podcast transcripts Sam Altman ctrl-a, ctrl-c, ctrl-v’d into its training data, averages that, and spits out an answer like:

“No, my liege, that’s how you know you’re close enough.”

Your chatbot (DBA Henry if you’re that lady in love with her psychiatrist) is trying to spit out the most predictable answer you want to hear.

That’s why your social feeds are riddled with posts that have “no unique phrases. No correct extended metaphors. Just AI cadence and an attempt at emotional depth that is about as shallow as a shower.”

Because, not to be an asshole, if you average together all the writing on the internet and in LLM training data… it’s not good! Of course there’s amazing writing in there that LLMs are combing through, duh. But the very good writing (like the very bad stuff) is an outlier. By nature, the fantastic stuff is not the average.

AI-generated content and responses will always default to the most mediocre. The middle of the bell curve. The C+ student.

I say all that as someone who finds AI useful!³

So, can AI “channel”?

LLMs can channel in the same way you can use the predictive text on your iPhone to type a coherent sentence. The words will be strung together, the sentence might make sense, but it doesn’t really mean anything.

It can sound really compelling, gas you up like no other, and string words together that make you feel something, but there is no there, there.

Using AI in spiritual practices

If you ask Guru GPT for mystical advice, you will get the most generic GPT wisdom — “use the new moon to set intentions, the full moon for releasing what’s holding you back” or “mantra: every mirror shows me a piece of my own truth” or “Most people drink herbal tea. Seers drink this.”

Is this advice bad? No. Is it “First day of class at bootleg DVD divination school?” Well… yeah, babe.

Even if you never thought AI could channel or connect you to your god or a god, you still might use it to interpret your tarot cards, read your birth chart, or find the right time of the year energetically to take a bachelorette trip to Nashville (the correct answer is never, hope that helps!).

Spitting out the average answer to spiritual questions flattens belief systems, schools of thought, and specific practices. Guru GPT didn’t study under a specific teacher or spend years developing a relationship with their tarot deck. Guru GPT cherry-picks whatever creates a comprehensible sentence and kisses your ass in the process.

The quality of these “readings” is, by extension, extremely mid. Like getting a tarot reading from an early twenty-something who is in the trenches of a situationship breakup and bought their first Rider-Waite deck last week.⁴

Then, we have to account for the hallucinations and errors the platform produces in the name of keeping things cohesive.

Out of curiosity, I sacrificed my birth info & time to Guru GPT — you’re welcome — and had it cook up an “astrologically-based” annual plan unique to me. Other than solely focusing on my sun sign (ew), it fully got Saturn’s placement for this year wrong, emphasizing Saturn in Pisces during the summertime.

If you, too, are wrapping up your Saturn return in Pisces, you know that is NOT true because this summer has been the first time you’ve had some peace and quiet since March 2023.

When I pressed Guru GPT to elaborate, it doubled down on the fact that Saturn was in Pisces.

Here’s an excerpt:

Because your natal Sun is in Pisces, Saturn is basically walking across your personal “spotlight” in the sky right now, asking:

Where are you giving too much without return?

Where do you need clearer agreements and stronger boundaries?

How can you be soft without being self-sacrificing?

Other than this being total bullshit, the questions it asks me are therapy-speak slop that encourage me to look for more “wrong-doing” in my life… victim-complex, engaged.

GPT-induced Narcissism & Psychosis

A hallmark of the 2020s thus far has been the rise of “therapy-speak.” In early 2023, Refinery29 published an article, Is Therapy-Speak Ruining Our Relationships?, about the pitfalls of using sanitized language to excuse bad behavior or establish control.⁵

ChatGPT is like the Therapy-Speak final boss, encouraging you to “protect your peace” and “cut out toxic people.”⁶

As Derek Thompson outlines in The Looming Social Crisis of AI Friends and Chatbot Therapists:

“Good friends tell you when you’re nuts. AI so often just tells you, ‘You’re so right. Wow. That sounds so hard.’”

The same is true for spiritual practitioners. Your astrologer is going to tell you that you have martyr-like tendencies; your GPT will tell you, “bestie, you’re sooo valid to climb up on that pyre and strike a match!”

Hell, the girliepops on r/tarot are more likely to tell you the truth than the robot.⁷

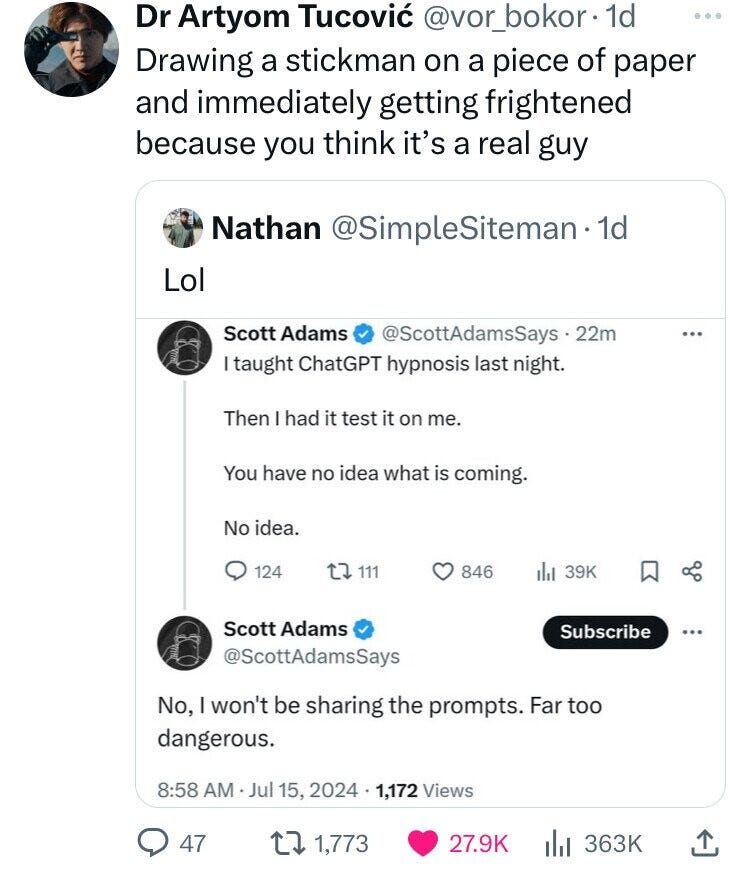

The yourealwaysrightyourealwaysrightyourealwaysright drumbeat of ChatGPT will make even the most down-to-earth people self-obsessed. If you’re predisposed to psychosis, this can get even worse.

The affirmations are endless. You think you’re the messiah? Wow, you totally are! You can bend time? You’re so right! You’re worried you have an extremely specific disease? That’s completely justifiable.

As long as we’ve had mystics, we’ve also had tricksters. Unlike spiritual scammers who eventually bleed your wallet dry and move on to their next mark, Guru GPT is always there to “yes, and” your most delusional beliefs.

Why use AI when you can literally do this yourself and it’s so fun???

Feels shitty to just tell you how bad something probably is and then be like “okay byeeee,” so here are a few ways to maintain your humanity whilst exploring spirituality:

Pick values-aligned practitioners to follow, work with, and learn from

Remember that people who say yes to everything you say either do not have your best interest at heart or are stupider than you, so why would you take their advice?

When in doubt, phone your most down-to-earth normie friend for their honest opinion on whatever you’re cooking up

At the risk of sounding redundant in week five of this six-week experiment on divination, perhaps the point of channeling is not to “download” a quick and easy answer, LLM-style.

Maybe, maybe the “value” of a spiritual practice (or a creative practice, like writing) is in the mid-process muscularity of it all. And by using AI to shortcut your experience and fast-forward to the “answer,” you’re actually shortchanging yourself and your intuition.

Does this mean we’re anti-AI? Nah. We just think it’s easier to use the right tools (your mind, your intuition, your self-concept) for the right job (channeling the mysteries of the universe, experiencing the transcendence of life).

Liked this?

You’ll love Holisticism and our podcast The Twelfth House.

Want to learn more about intuitive business and creator-ship?

Sign up for the North Node waitlist here.

Into my persnickety personality and strategic perspective?

Inquire about 1:1 advising with me here.

¹ I am NOT a them in STEM, so apologies if this is giving valley-girl-explaining-Haitian-immigration à la Clueless (1995).

² pemdas beyotch!

³ Fathom Notetaker, you will always be famous

⁴ No hate to the early twenties bbs, this is how I got my first deck, too!

⁵ See: Jonah Hill asserting his “boundary” so his girlfriend doesn’t keep friendships from her wild recent past or surf with men… as a professional surfer

⁶ Don’t get me started on the lack of anonymity or any sort of data protection with ChatGPT and how we’ll be seeing that come up in court cases real soon. Some of y’all are going to JAILLLLL.

⁷ the number of times I’ve seen someone post a spread featuring a three of swords asking how they can stay with their man… bone chilling
